Apache Cassandra 0.5.0 was released over the weekend, four months after 0.4. (Upgrade notes; full changelog.) We're excited about releasing 0.5 because it makes life even better for people using Cassandra as their primary data source -- as opposed to a replica, possibly denormalized, of data that exists somewhere else.

The Cassandra distributed database has always had a commitlog to provide durable writes, and in 0.4 we added an option to wait for commitlog sync before acknowledging writes, for cases where even a few seconds of potential data loss was not an option. But what if a node goes down temporarily? 0.5 adds proactive repair, what Dynamo calls "anti-entropy," to synchronize any updates Hinted Handoff or read repair didn't catch across all replicas for a given piece of data.

0.5 also adds load balancing and significantly improves bootstrap (adding nodes to a running cluster). We've also been busy adding documentation on operations in production and system internals.

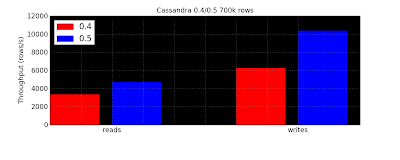

Finally, in 0.5 we've improved concurrency across the board, improving insert speed by over 50% on the stress.py benchmark (from contrib/) on a relatively modest 4-core system with 2GB of RAM. We've also added a [row] key cache, enabling similar relative improvements in reads:

(You will note that unlike most systems, Cassandra reads are usually slower than writes. 0.6 will narrow this gap with full row caching and mmap'd I/O, but fundamentally we think optimizing for writes is the right thing to do since writes have always been harder to scale.)

Log replay, flush, compaction, and range queries are also faster.

0.5 also brings new tools, including JSON-based data export and import, an improved command-line interface, and new JMX metrics.

One final note: like all distributed systems, Cassandra is designed to maximize throughput when under load from many clients. Benchmarking with a single thread or a small handful will not give you numbers representative of production (unless you only ever have four or five users at a time in production, I suppose). Please don't ask "why is Cassandra so slow" and offer up a single-threaded benchmark as evidence; that makes me sad inside. Here's 1000 words:

(Thanks to Brandon Williams for the graphs.)
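
To make the point about concurrent clients concrete, here is a toy sketch in Python. It does not talk to Cassandra at all: `fake_request` is a hypothetical stand-in that just sleeps to simulate a round trip, and the latency and request counts are made-up numbers. It only illustrates why a system built to serve many clients at once looks slow when measured from a single thread.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(latency_s=0.005):
    """Hypothetical stand-in for one client request (not a real Cassandra call).

    Sleeping simulates time spent waiting on the network and the server.
    """
    time.sleep(latency_s)


def measure_throughput(num_threads, total_requests=200, latency_s=0.005):
    """Issue total_requests from num_threads workers; return requests per second."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # list() drains the iterator so every request finishes before we stop the clock.
        list(pool.map(lambda _: fake_request(latency_s), range(total_requests)))
    elapsed = time.perf_counter() - start
    return total_requests / elapsed


if __name__ == "__main__":
    for n in (1, 4, 16, 64):
        print(f"{n:3d} threads: {measure_throughput(n):8.0f} requests/sec")
```

With one thread, throughput is capped at roughly one request per round trip; adding threads lets requests overlap, which is the regime the graphs are meant to show.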

Comments

I would be really interested to know the size of the cluster you used for the benchmarks. It would give a better understanding of the throughput.

And how many threads were used in the first graph, and was the experiment really run on only a single node?

The second graph is a 4 node cluster running with a replication factor of 3 (that is, each write is replicated to 3 nodes), so you'd expect throughput to be about 30% more than the single node case (four nodes sharing three times the write work, or 4/3), minus some overhead for dealing with the network, and that's what happens.
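
The arithmetic behind that estimate can be written as a rough sketch. It assumes an idealized model in which every node has the same capacity and each write costs one unit of work on each replica; the function name is hypothetical and network overhead is ignored.

```python
def expected_write_speedup(num_nodes: int, replication_factor: int) -> float:
    """Ideal write throughput relative to a single node.

    Assumes equal-capacity nodes and that each write is applied on
    replication_factor of them, so the cluster does replication_factor
    units of work per write spread over num_nodes nodes.
    """
    if replication_factor < 1 or num_nodes < replication_factor:
        raise ValueError("need 1 <= replication_factor <= num_nodes")
    return num_nodes / replication_factor


print(f"{expected_write_speedup(4, 3):.2f}x")  # prints 1.33x
```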